Most devs don’t understand how context windows work

Matt Pocock

49,437 views • 6 days ago

Video Summary

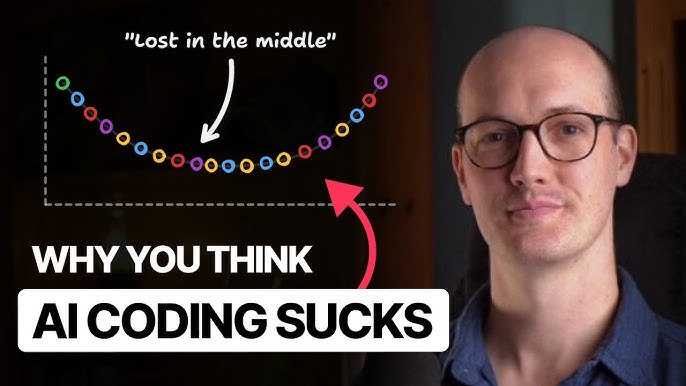

The debate surrounding AI coding agents often centers on their efficacy: one side deems them ineffective, while the other asserts that users are simply employing them incorrectly. The primary constraint highlighted is the context window, which encompasses all input and output tokens an LLM processes, and many developers lack a clear understanding of how this window works and how it affects agent performance. Each LLM has a hardcoded context window limit, measured in tokens, which can be reached either through many messages or through a single very large input, such as an uploaded document or a long transcription. Exceeding this limit results in an error from the LLM provider. A key challenge with larger context windows is the "lost in the middle" problem: information positioned in the middle of the conversation or input is deprioritized by the LLM's attention mechanism, which hurts retrieval. As a result, the model becomes less effective as more information is fed into it.

To manage context window limitations and improve performance, developers can regularly clear the chat history, which resets the agent's "memory" and provides a clean slate. Alternatively, some agents offer a "compact" function that summarizes the conversation, reducing token usage while attempting to preserve the essence of the dialogue; the summarization itself, however, consumes tokens and LLM time. The speaker emphasizes keeping the context window lean and cautions against bloat from external tools or extensive custom rules, which can significantly diminish an agent's ability to use the information it has. Ultimately, understanding and managing the context window is presented as crucial for getting good performance from AI coding agents, and models should be judged by how well they retrieve information from their context window rather than by the window's size alone.

Short Highlights

- The primary constraint for AI coding agents is the context window, which includes all input and output tokens.

- LLMs have hardcoded context window limits in tokens, and exceeding them results in errors.

- As more of the context window is filled, performance can degrade due to the "lost in the middle" problem, where information in the middle of a conversation is deprioritized.

- Strategies to manage context include clearing chat histories or compacting conversations into summaries.

- Effective LLM assessment should focus on information retrieval from the context window, not just its size.

Key Details

The Context Window: A Core Constraint for Coding Agents [00:22]

- The context window represents the entire set of input and output tokens an LLM processes.

- Input tokens include system prompts and user messages, while output tokens are the LLM's responses.

- As conversations grow, the number of tokens within the context window increases, eventually hitting a hardcoded limit set by the model provider.

- This limit can be reached through numerous messages or a single, very large input, such as uploading documents or transcribing lengthy content.

"The context window is the entire set of input and output tokens that the LLM sees."
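The accounting described above (every message counts, and one oversized input can overflow the window on its own) can be sketched in Python. This is a toy model under stated assumptions: the 4-characters-per-token estimate, the `Conversation` class, `ContextWindowExceeded`, and the 50-token limit are all hypothetical illustrations, not any provider's real tokenizer, API, or limit.

```python
from dataclasses import dataclass, field


class ContextWindowExceeded(Exception):
    """Hypothetical stand-in for the error a provider returns on overflow."""


def estimate_tokens(text: str) -> int:
    # Assumption: roughly 4 characters per token. Real models use their own
    # tokenizers, so this is only a ballpark estimate.
    return (len(text) + 3) // 4


@dataclass
class Conversation:
    limit: int  # hypothetical hardcoded context limit, in tokens
    messages: list[tuple[str, str]] = field(default_factory=list)

    def used_tokens(self) -> int:
        # System prompt, user input, and model output all count toward the window.
        return sum(estimate_tokens(text) for _, text in self.messages)

    def add(self, role: str, text: str) -> None:
        needed = self.used_tokens() + estimate_tokens(text)
        if needed > self.limit:
            raise ContextWindowExceeded(f"{needed} tokens exceeds the {self.limit}-token limit")
        self.messages.append((role, text))


convo = Conversation(limit=50)
convo.add("system", "You are a coding agent.")
convo.add("user", "Fix the failing test.")
print(convo.used_tokens())  # 12
try:
    convo.add("user", "x" * 200)  # a single oversized paste is enough to overflow
except ContextWindowExceeded as err:
    print(err)  # 62 tokens exceeds the 50-token limit
```

The rejected message is never appended, so the conversation stays at its last valid state, which mirrors how a provider refuses the whole request rather than truncating it silently.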

Model Limits and Performance Degradation [02:20]

- Models like Claude Haiku 4.5 offer limits of 200,000 tokens, while Gemini 2.5 Pro boasts up to 2 million tokens.

- Smaller or older models, such as Qwen Math Plus, may have significantly smaller context windows, around 4,000 tokens.

- Context window limits exist due to architectural constraints, memory usage, and, critically, performance degradation with increased context.

- Filling a larger context window tends to worsen performance because models struggle to retrieve specific information, akin to finding a "needle in a haystack."
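One way to probe the "needle in a haystack" weakness is to hide a known fact at different depths of a long prompt and check whether the model's answer recovers it. The harness below is a minimal sketch of that idea; `ask_model` is a hypothetical callable you would wire to your own LLM client, and `edges_only_stub` is a deliberately fake model that only "reads" the first and last three lines, used purely to show how the report looks. It is not a simulation of real attention.

```python
from typing import Callable


def build_haystack_prompt(needle: str, filler: list[str], depth: float) -> str:
    """Insert `needle` at a relative depth of the filler (0.0 = start, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(filler))
    return "\n".join(filler[:position] + [needle] + filler[position:])


def needle_recall(
    ask_model: Callable[[str], str],
    needle: str,
    expected: str,
    filler: list[str],
    question: str,
    steps: int = 5,
) -> dict[float, bool]:
    """For each depth, report whether the model's answer contains `expected`."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    results: dict[float, bool] = {}
    for i in range(steps):
        depth = i / (steps - 1)
        prompt = build_haystack_prompt(needle, filler, depth) + "\n\n" + question
        results[depth] = expected in ask_model(prompt)
    return results


def edges_only_stub(prompt: str) -> str:
    # Toy stand-in, NOT a real model: it echoes only the first and last three
    # lines of the prompt, so anything in the middle is "missed".
    lines = prompt.split("\n")
    return " ".join(lines[:3] + lines[-3:])


filler = [f"Filler sentence {i}." for i in range(20)]
report = needle_recall(
    edges_only_stub,
    needle="The deploy key is AZURE-42.",  # hypothetical fact to retrieve
    expected="AZURE-42",
    filler=filler,
    question="What is the deploy key?",
)
print(report)  # {0.0: True, 0.25: False, 0.5: False, 0.75: False, 1.0: True}
```

Against a real model you would use far more filler and repeat each depth several times, since a single answer per depth is a noisy signal.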

The "Lost in the Middle" Problem and Attention Mechanisms [03:30]

- All LLMs suffer from the "lost in the middle" problem, where information located centrally within the context window is deprioritized by the attention mechanism.

- This means information at the beginning and end of a conversation or input has the most impact, while middle content is less influential.

- This emergent property mimics human cognitive biases like primacy and recency bias.

- Keeping the context short reduces the likelihood of these "lost in the middle" issues, since models perform better with focused information.

"The stuff at the start of the conversation is going to have most impact and the stuff at the end is going to have the most impact. But all the big bloated stuff in the middle is not necessarily going to impact the result that strongly."
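If the start and end of the context carry the most weight, one commonly used mitigation (not something the video prescribes; the speaker's advice is simply to keep the context short) is to order retrieved chunks so the most important ones sit at the edges and the least important land in the middle. A minimal sketch, assuming the chunks are already sorted from most to least important; `reorder_for_edges` is a hypothetical helper name.

```python
def reorder_for_edges(chunks_by_priority: list[str]) -> list[str]:
    """Place the most important chunks at both ends and the least important in the middle.

    Expects `chunks_by_priority` sorted from most to least important.
    """
    front: list[str] = []
    back: list[str] = []
    for i, chunk in enumerate(chunks_by_priority):
        # Alternate: 1st, 3rd, 5th... go to the front; 2nd, 4th... go to the back.
        (front if i % 2 == 0 else back).append(chunk)
    # Reverse the back half so the 2nd most important chunk ends up last.
    return front + back[::-1]


print(reorder_for_edges(["A", "B", "C", "D", "E"]))  # ['A', 'C', 'E', 'D', 'B']
```

Reordering only changes where content sits; it does not shrink the context, so it complements rather than replaces keeping the window lean.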

Managing Context in Claude Code [05:10]

- Tools like Claude Code allow users to monitor context usage, displaying tokens consumed by system prompts and conversation messages.

- Regularly clearing the chat history is recommended to refresh the agent's memory and free up the context window for better performance.

- An alternative is to "compact" the conversation, which summarizes past messages to reduce token count while preserving the conversational essence.

- While compacting can help mitigate "lost in the middle" issues and preserve conversational context, it consumes tokens and LLM processing time for summarization.

"Compacting is useful when you want to preserve the vibes of a conversation. But clear should be your default when you just want to clear it."
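The difference between the two strategies can be modeled on a plain list of `(role, text)` messages. This is a toy sketch, not how Claude Code implements its clear or compact features: `clear` and `compact` are hypothetical functions, and `summarize` is a stand-in for the extra LLM call that compacting requires, which is where its token and time cost comes from.

```python
from typing import Callable

Message = tuple[str, str]  # (role, text)


def clear(messages: list[Message]) -> list[Message]:
    # Keep only the system prompt; the conversation starts from a clean slate.
    return [m for m in messages if m[0] == "system"]


def compact(messages: list[Message], summarize: Callable[[str], str]) -> list[Message]:
    # `summarize` stands in for an extra LLM call. That call has to read the
    # whole history, so compacting itself costs tokens and time.
    system = [m for m in messages if m[0] == "system"]
    transcript = "\n".join(f"{role}: {text}" for role, text in messages if role != "system")
    return system + [("user", "Summary of the conversation so far:\n" + summarize(transcript))]


messages_so_far = [
    ("system", "You are a coding agent."),
    ("user", "Rename getUser to fetchUser."),
    ("assistant", "Renamed in 3 files."),
]
print(clear(messages_so_far))
# A trivial fixed-string "summarizer", used here only for illustration.
print(compact(messages_so_far, summarize=lambda text: "Renamed getUser to fetchUser."))
```

Clearing is free and deterministic; compacting keeps some continuity ("the vibes") at the price of an extra model call whose summary may itself drop details.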

Cautionary Notes on Bloat and Assessment [07:09]

- MCP servers, while offering convenient toolsets, can rapidly bloat the context window, with system prompts and tools consuming significant portions of the available tokens.

- The speaker advises extreme caution with MCP servers and large custom rules to maintain a lean context window.

- When assessing an LLM, it's crucial to evaluate its ability to retrieve information from its context window, not solely its size.

- Meta's Llama 4 Scout, despite a 10 million token context window, was found to suffer from significant "lost in the middle" problems, rendering its large capacity less effective.

"You might have a conversation here where like a third of it is system prompt. You know, a big chunk of it is MCP tools just from a couple of MCP servers and then just a few extra stuff is messages."
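To see how much of the budget the system prompt and tool definitions take before any real conversation starts, you can express each part as a share of the window, similar in spirit to the per-category usage Claude Code displays. The sketch below assumes you already have per-section token counts; `context_breakdown` is a hypothetical helper, and the counts are invented for illustration against a 200,000-token window like the Claude Haiku 4.5 limit mentioned earlier.

```python
def context_breakdown(sections: dict[str, int], limit: int) -> dict[str, float]:
    """Return each section's share of the context window (plus free space) as a percentage."""
    used = sum(sections.values())
    if used > limit:
        raise ValueError(f"{used} tokens already exceeds the {limit}-token limit")
    shares = {name: 100 * tokens / limit for name, tokens in sections.items()}
    shares["free"] = 100 * (limit - used) / limit
    return shares


# Hypothetical token counts for illustration only (not measured from any tool).
usage = {"system_prompt": 20_000, "mcp_tools": 40_000, "messages": 10_000}
for name, pct in context_breakdown(usage, limit=200_000).items():
    print(f"{name:>13}: {pct:5.1f}%")
```

Running the same breakdown before and after connecting an MCP server is a quick way to check how much room its tool definitions leave for actual work.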
